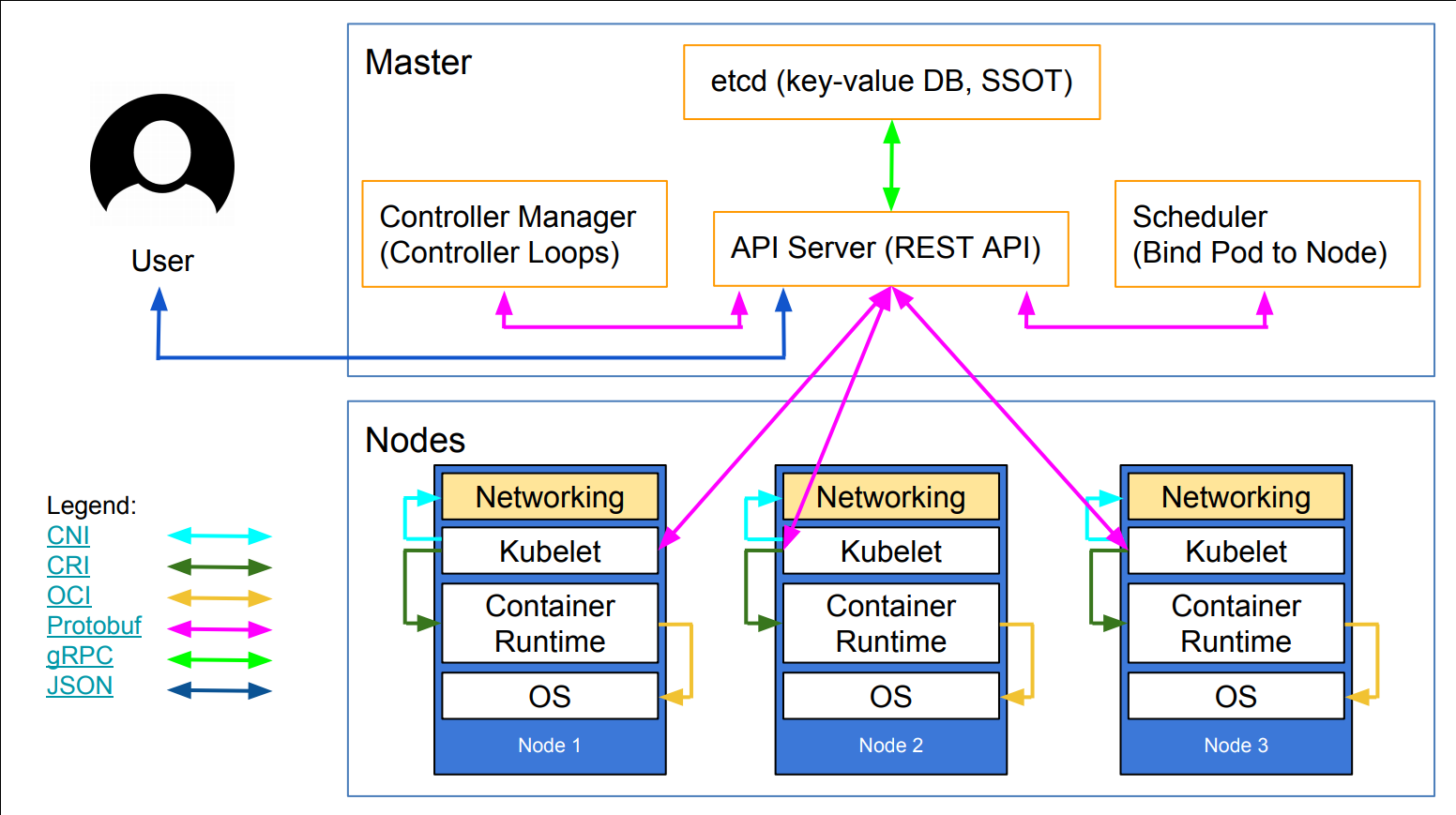

class: title

Deep Dive into<br/>Kubernetes Internals<br/>for Builders and Operators<br/><br/>(LISA2019 tutorial)<br/>

.footnote[☝🏻 Slides!]

.debug[These slides have been built from commit: ae9780f [lisa/begin.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/lisa/begin.md)]

---

## Outline

- Introductions
- Kubernetes anatomy
- Building a 1-node cluster
- Connecting to services
- Adding more nodes
- What's missing

---

class: title

Introductions

---

class: tutorial-only

## Viewer advisory

- Have you attended my talk on Monday?

--

- Then you may experience *déjà-vu* during the next few minutes

  (Sorry!)

--

- But I promise we'll soon build (and break) some clusters!

---

## Hi!

- Jérôme Petazzoni ([@jpetazzo](https://twitter.com/jpetazzo))
- 🇫🇷🇺🇸🇩🇪
- 📦🧔🏻
- 🐋(📅📅📅📅📅📅📅)
- 🔥🧠😢💊 ([1], [2], [3])
- 👨🏻🏫✨☸️💰
- 😄👍🏻

[1]: http://jpetazzo.github.io/2018/09/06/the-depression-gnomes/
[2]: http://jpetazzo.github.io/2018/02/17/seven-years-at-docker/
[3]: http://jpetazzo.github.io/2017/12/24/productivity-depression-kanban-emoji/

???

I'm French, living in the US, with also a foot in Berlin (Germany).

I'm a container hipster: I was running containers in production before it was cool.

I worked 7 years at Docker, which according to Corey Quinn, is "long enough to be legally declared dead".

I also struggled for a few years with depression and burnout. It's not what I'll discuss today, but it's a topic that matters a lot to me, and I wrote a bit about it; check my blog if you'd like.

After a break, I decided to do something I love: teaching witchcraft. I deliver Kubernetes training.
As you can see, I love emojis, but if you don't, it's OK. (There will be far fewer emojis on the following slides.)

---

## Why this talk?

- One of my goals in 2018: pass the CKA exam

--

- Things I knew:
  - kubeadm
  - kubectl run, expose, YAML, Helm
  - ancient container lore

--

- Things I didn't:
  - how Kubernetes *really* works
  - how to deploy Kubernetes The Hard Way

---

## Scope

- Goals:
  - learn enough about Kubernetes to ace that exam
  - learn enough to teach that stuff

- Non-goals:
  - set up a *production* cluster from scratch
  - build everything from source

---

## Why are *you* here?

--

- Need/want/must build Kubernetes clusters

--

- Just curious about Kubernetes internals

--

- The Zelda theme

--

- (Other, please specify)

--

class: tutorial-only

.footnote[*Damn. Jérôme is even using the same jokes for his talk and his tutorial!<br/>This guy really has no shame. Tsk.*]

---

class: title

TL,DR

---

class: title

*The easiest way to install Kubernetes is to get someone else to do it for you.*

(Me, after extensive research.)

???

Which means that if, at any point, you decide to leave, I will not take it personally, but assume that you eventually saw the light, and that you would like to hire me or some of my colleagues to build your Kubernetes clusters. It's all good.
---

class: talk-only

## This talk is also available as a tutorial

- Wednesday, October 30, 2019 - 11:00 am–12:30 pm
- Salon ABCD
- Same content
- Everyone will get a cluster of VMs
- Everyone will be able to do the stuff that I'll demo today!

---

class: title

The Truth¹ About Kubernetes

.footnote[¹Some of it]

---

## What we want to do

```bash
kubectl run web --image=nginx --replicas=3
```

*or*

```bash
kubectl create deployment web --image=nginx
kubectl scale deployment web --replicas=3
```

*then*

```bash
kubectl expose deployment web --port=80
curl http://...
```

???

Kubernetes might feel like an imperative system, because we can say "run this; do that."

---

## What really happens

- `kubectl` generates a manifest describing a Deployment
- That manifest is sent to the Kubernetes API server
- The Kubernetes API server validates the manifest
- ... then persists it to etcd
- Some *controllers* wake up and do a bunch of stuff

.footnote[*The amazing diagram on the next slide is courtesy of [Lucas Käldström](https://twitter.com/kubernetesonarm).*]

???

In reality, it is a declarative system.

We write manifests, descriptions of what we want, and Kubernetes tries to make it happen.

---

class: pic

???

What we're really doing is storing a bunch of objects in etcd. But etcd, unlike a SQL database, doesn't have schemas or types.
So to prevent us from dumping any kind of trash data in etcd, we have to read/write to it through the API server. The API server will enforce typing and consistency.

Etcd doesn't have schemas or types, but it has the ability to watch a key or set of keys, meaning that it's possible to subscribe to updates of objects.

The controller manager is a process that has a bunch of loops, each one responsible for a specific type of object. So there is one that will watch the deployments, and as soon as we create, update, or delete a deployment, it will wake up and do something about it.

---

## 19,000 words

They say, "a picture is worth one thousand words."

The following 19 slides show what really happens when we run:

```bash
kubectl run web --image=nginx --replicas=3
```

---

class: pic

(19 picture-only slides; images not available)

---

class: title

Let's get
this party started!

---

## Everyone gets their own cluster

- Everyone should have a little printed card
- That card has IP address / login / password for a personal cluster
- That cluster will be up for the duration of the tutorial

  (but not much longer, alas, because these cost $$$)

---

## How these clusters are deployed

- Create a bunch of cloud VMs

  (today: Ubuntu 18.04 on AWS EC2)

- Install binaries, create user account

  (with parallel-ssh because it's *fast*)

- Generate the little cards with a Jinja2 template

- If you want to do it for your own tutorial:

  check the [prepare-vms](https://github.com/jpetazzo/container.training/tree/master/prepare-vms) directory in the training repo!

---

## Exercises

- Labs and exercises are clearly identified

.exercise[

- This indicates something that you are invited to do

- First, let's log into the first node of the cluster:
  ```bash
  ssh docker@`A.B.C.D`
  ```

  (Replace A.B.C.D with the IP address of the first node)

]

---

## Slides

- These slides are available online

.exercise[

- Open this slide deck in a local browser:
  ```open
  https://lisa-2019-10.container.training
  ```

- Select the tutorial link

- Type the number of that slide + ENTER

]

---

# Building our own cluster

- Let's build our own cluster!
  *Perfection is attained not when there is nothing left to add, but when there is nothing left to take away. (Antoine de Saint-Exupéry)*

- Our goal is to build a minimal cluster allowing us to:
  - create a Deployment (with `kubectl run` or `kubectl create deployment`)
  - expose it with a Service
  - connect to that service

- "Minimal" here means:
  - smaller number of components
  - smaller number of command-line flags
  - smaller number of configuration files

---

## Non-goals

- For now, we don't care about security
- For now, we don't care about scalability
- For now, we don't care about high availability
- All we care about is *simplicity*

---

## Checking our environment

- Let's make sure we have everything we need first

.exercise[

- Get root:
  ```bash
  sudo -i
  ```

- Check available versions:
  ```bash
  etcd -version
  kube-apiserver --version
  dockerd --version
  ```

]

---

## The plan

1. Start API server
2. Interact with it (create Deployment and Service)
3. See what's broken
4. Fix it and go back to step 2 until it works!

---

## Dealing with multiple processes

- We are going to start many processes

- Depending on what you're comfortable with, you can:
  - open multiple windows and multiple SSH connections
  - use a terminal multiplexer like screen or tmux
  - put processes in the background with `&`
    <br/>(warning: log output might get confusing to read!)
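
If we go with the background option, one way to keep the logs readable is to send each process's output to its own file. The following is a minimal sketch, assuming bash; the `start_bg` helper and the log directory are hypothetical names, not part of the tutorial setup:

```bash
# Hypothetical helper: run a command in the background, one log file per process.
LOGDIR=$(mktemp -d)   # assumption: any writable directory works

start_bg() {
  local name=$1
  shift
  # Send stdout and stderr of the command to its own log file.
  "$@" >"$LOGDIR/$name.log" 2>&1 &
  # Remember the PID so that the process can be stopped later.
  echo "$!" >"$LOGDIR/$name.pid"
}

# Demo with a harmless command; during the labs this could be e.g. `start_bg etcd etcd`.
start_bg demo sh -c 'echo hello from demo'
wait
cat "$LOGDIR/demo.log"
```

A given log can then be followed with `tail -f "$LOGDIR/etcd.log"`, and a process stopped with `kill "$(cat "$LOGDIR/etcd.pid")"`.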
---

## Starting API server

.exercise[

- Try to start the API server:
  ```bash
  kube-apiserver
  # It will fail with "--etcd-servers must be specified"
  ```

]

Since the API server stores everything in etcd, it cannot start without it.

---

## Starting etcd

.exercise[

- Try to start etcd:
  ```bash
  etcd
  ```

]

Success!

Note the last line of output:
```
serving insecure client requests on 127.0.0.1:2379, this is strongly discouraged!
```

*Sure, that's discouraged. But thanks for telling us the address!*

---

## Starting API server (for real)

- Try again, passing the `--etcd-servers` argument

- That argument should be a comma-separated list of URLs

.exercise[

- Start API server:
  ```bash
  kube-apiserver --etcd-servers http://127.0.0.1:2379
  ```

]

Success!

---

## Interacting with API server

- Let's try a few "classic" commands

.exercise[

- List nodes:
  ```bash
  kubectl get nodes
  ```

- List services:
  ```bash
  kubectl get services
  ```

]

We should get `No resources found.` and the `kubernetes` service, respectively.

Note: the API server automatically created the `kubernetes` service entry.

---

class: extra-details

## What about `kubeconfig`?
- We didn't need to create a `kubeconfig` file

- By default, the API server is listening on `localhost:8080`

  (without requiring authentication)

- By default, `kubectl` connects to `localhost:8080`

  (without providing authentication)

---

## Creating a Deployment

- Let's run a web server!

.exercise[

- Create a Deployment with NGINX:
  ```bash
  kubectl create deployment web --image=nginx
  ```

]

Success?

---

## Checking our Deployment status

.exercise[

- Look at pods, deployments, etc.:
  ```bash
  kubectl get all
  ```

]

Our Deployment is in bad shape:
```
NAME                  READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/web   0/1     0            0           2m26s
```

And there is no ReplicaSet, and no Pod.

---

## What's going on?

- We stored the definition of our Deployment in etcd

  (through the API server)

- But there is no *controller* to do the rest of the work

- We need to start the *controller manager*

---

## Starting the controller manager

.exercise[

- Try to start the controller manager:
  ```bash
  kube-controller-manager
  ```

]

The final error message is:
```
invalid configuration: no configuration has been provided
```

But the logs include another useful piece of information:
```
Neither --kubeconfig nor --master was specified.
Using the inClusterConfig. This might not work.
```

---

## Reminder: everyone talks to API server

- The controller manager needs to connect to the API server

- It *does not* have a convenient `localhost:8080` default

- We can pass the connection information in two ways:
  - `--master` and a host:port combination (easy)
  - `--kubeconfig` and a `kubeconfig` file

- For simplicity, we'll use the first option

---

## Starting the controller manager (for real)

.exercise[

- Start the controller manager:
  ```bash
  kube-controller-manager --master http://localhost:8080
  ```

]

Success!

---

## Checking our Deployment status

.exercise[

- Check all our resources again:
  ```bash
  kubectl get all
  ```

]

We now have a ReplicaSet.

But we still don't have a Pod.

---

## What's going on?

In the controller manager logs, we should see something like this:
```
E0404 15:46:25.753376 22847 replica_set.go:450] Sync "default/web-5bc9bd5b8d" failed with `No API token found for service account "default"`, retry after the token is automatically created and added to the service account
```

- The service account `default` was automatically added to our Deployment

  (and to its pods)

- The service account `default` exists

- But it doesn't have an associated token

  (the token is a secret; creating it requires signature; therefore a CA)

---

## Solving the missing token issue

There are many ways to solve that issue.

We are going to list a few (to get an idea of what's happening behind the scenes).
Of course, we don't need to perform *all* the solutions mentioned here.

---

## Option 1: disable service accounts

- Restart the API server with `--disable-admission-plugins=ServiceAccount`

- The API server will no longer add a service account automatically

- Our pods will be created without a service account

---

## Option 2: do not mount the (missing) token

- Add `automountServiceAccountToken: false` to the Deployment spec

  *or*

- Add `automountServiceAccountToken: false` to the default ServiceAccount

- The ReplicaSet controller will no longer create pods referencing the (missing) token

.exercise[

- Programmatically change the `default` ServiceAccount:
  ```bash
  kubectl patch sa default -p "automountServiceAccountToken: false"
  ```

]

---

## Option 3: set up service accounts properly

- This is the most complex option!

- Generate a key pair

- Pass the private key to the controller manager

  (to generate and sign tokens)

- Pass the public key to the API server

  (to verify these tokens)

---

## Continuing without service account token

- Once we patch the default service account, the ReplicaSet can create a Pod

.exercise[

- Check that we now have a pod:
  ```bash
  kubectl get all
  ```

]

Note: we might have to wait a bit for the ReplicaSet controller to retry.

If we're impatient, we can restart the controller manager.

---

## What's next?
- Our pod exists, but it is in `Pending` state

- Remember, we don't have a node so far

  (`kubectl get nodes` shows an empty list)

- We need to:
  - start a container engine
  - start kubelet

---

## Starting a container engine

- We're going to use Docker (because it's the default option)

.exercise[

- Start the Docker Engine:
  ```bash
  dockerd
  ```

]

Success!

Feel free to check that it actually works with e.g.:
```bash
docker run alpine echo hello world
```

---

## Starting kubelet

- If we start kubelet without arguments, it *will* start

- But it will not join the cluster!

- It will start in *standalone* mode

- Just like with the controller manager, we need to tell kubelet where the API server is

- Alas, kubelet doesn't have a simple `--master` option

- We have to use `--kubeconfig`

- We need to write a `kubeconfig` file for kubelet

---

## Writing a kubeconfig file

- We can copy/paste a bunch of YAML

- Or we can generate the file with `kubectl`

.exercise[

- Create the file `~/.kube/config` with `kubectl`:
  ```bash
  kubectl config \
          set-cluster localhost --server http://localhost:8080
  kubectl config \
          set-context localhost --cluster localhost
  kubectl config \
          use-context localhost
  ```

]

---

## Our `~/.kube/config` file

The file that we generated looks like the one below.

That one has been slightly simplified (removing extraneous fields), but it is still valid.
```yaml
apiVersion: v1
kind: Config
current-context: localhost
contexts:
- name: localhost
  context:
    cluster: localhost
clusters:
- name: localhost
  cluster:
    server: http://localhost:8080
```

---

## Starting kubelet

.exercise[

- Start kubelet with that kubeconfig file:
  ```bash
  kubelet --kubeconfig ~/.kube/config
  ```

]

Success!

---

## Looking at our 1-node cluster

- Let's check that our node registered correctly

.exercise[

- List the nodes in our cluster:
  ```bash
  kubectl get nodes
  ```

]

Our node should show up.

Its name will be its hostname (it should be `node1`).

---

## Are we there yet?

- Let's check if our pod is running

.exercise[

- List all resources:
  ```bash
  kubectl get all
  ```

]

--

Our pod is still `Pending`. 🤔

--

Which is normal: it needs to be *scheduled*.

(i.e., something needs to decide which node it should go on.)

---

## Scheduling our pod

- Why do we need a scheduling decision, since we have only one node?

- The node might be full, unavailable; the pod might have constraints ...
- The easiest way to schedule our pod is to start the scheduler

  (we could also schedule it manually)

---

## Starting the scheduler

- The scheduler also needs to know how to connect to the API server

- Just like for the controller manager, we can use `--kubeconfig` or `--master`

.exercise[

- Start the scheduler:
  ```bash
  kube-scheduler --master http://localhost:8080
  ```

]

- Our pod should now start correctly

---

## Checking the status of our pod

- Our pod will go through a short `ContainerCreating` phase

- Then it will be `Running`

.exercise[

- Check pod status:
  ```bash
  kubectl get pods
  ```

]

Success!

---

class: extra-details

## Scheduling a pod manually

- We can schedule a pod in `Pending` state by creating a Binding, e.g.:
  ```bash
  kubectl create -f- <<EOF
  apiVersion: v1
  kind: Binding
  metadata:
    name: name-of-the-pod
  target:
    apiVersion: v1
    kind: Node
    name: name-of-the-node
  EOF
  ```

- This is actually how the scheduler works!

- It watches pods, makes scheduling decisions, and creates Binding objects

---

## Connecting to our pod

- Let's check that our pod correctly runs NGINX

.exercise[

- Check our pod's IP address:
  ```bash
  kubectl get pods -o wide
  ```

- Send an HTTP request to the pod:
  ```bash
  curl `X.X.X.X`
  ```

]

We should see the `Welcome to nginx!` page.
---

## Exposing our Deployment

- We can now create a Service associated with this Deployment

.exercise[

- Expose the Deployment's port 80:
  ```bash
  kubectl expose deployment web --port=80
  ```

- Check the Service's ClusterIP, and try connecting:
  ```bash
  kubectl get service web
  curl http://`X.X.X.X`
  ```

]

--

This won't work. We need kube-proxy to enable internal communication.

---

## Starting kube-proxy

- kube-proxy also needs to connect to the API server

- It can work with the `--master` flag

  (although that will be deprecated in the future)

.exercise[

- Start kube-proxy:
  ```bash
  kube-proxy --master http://localhost:8080
  ```

]

---

## Connecting to our Service

- Now that kube-proxy is running, we should be able to connect

.exercise[

- Check the Service's ClusterIP again, and retry connecting:
  ```bash
  kubectl get service web
  curl http://`X.X.X.X`
  ```

]

Success!

---

class: extra-details

## How kube-proxy works

- kube-proxy watches Service resources

- When a Service is created or updated, kube-proxy creates iptables rules

.exercise[

- Check out the `OUTPUT` chain in the `nat` table:
  ```bash
  iptables -t nat -L OUTPUT
  ```

- Traffic is sent to `KUBE-SERVICES`; check that too:
  ```bash
  iptables -t nat -L KUBE-SERVICES
  ```

]

For each Service, there is an entry in that chain.
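
To see these per-Service entries at a glance, we can filter the rules in `iptables-save` format. The following is only a sketch: the two sample rules are hand-written for illustration (made-up addresses and chain names, not captured from a real cluster), the `list_services` helper is hypothetical, and real kube-proxy output contains more rules and options than this.

```bash
# Hand-written sample in iptables-save format (illustrative only, not real output).
sample_rules() {
cat <<'EOF'
-A KUBE-SERVICES -d 10.0.0.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-SVC-AAAAAAAAAAAAAAAA
-A KUBE-SERVICES -d 10.0.0.42/32 -p tcp -m comment --comment "default/web: cluster IP" -m tcp --dport 80 -j KUBE-SVC-BBBBBBBBBBBBBBBB
EOF
}

# Hypothetical helper: for each KUBE-SERVICES rule read on stdin,
# print "<destination IP> <destination port> <target chain>".
list_services() {
  awk '$1 == "-A" && $2 == "KUBE-SERVICES" {
    ip = port = chain = ""
    for (i = 3; i < NF; i++) {
      if ($i == "-d")      ip = $(i+1)
      if ($i == "--dport") port = $(i+1)
      if ($i == "-j")      chain = $(i+1)
    }
    sub(/\/32$/, "", ip)   # drop the /32 suffix of the ClusterIP
    print ip, port, chain
  }'
}

sample_rules | list_services
```

On a node where kube-proxy is running, the same filter could be fed with `iptables-save -t nat | list_services` (as root).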
---

class: extra-details

## Diving into iptables

- The last command showed a chain named `KUBE-SVC-...` corresponding to our service

.exercise[

- Check that `KUBE-SVC-...` chain:
  ```bash
  iptables -t nat -L `KUBE-SVC-...`
  ```

- It should show a jump to a `KUBE-SEP-...` chain; check it out too:
  ```bash
  iptables -t nat -L `KUBE-SEP-...`
  ```

]

This is a `DNAT` rule to rewrite the destination address of the connection to our pod.

This is how kube-proxy works!

---

class: extra-details

## kube-router, IPVS

- With recent versions of Kubernetes, it is possible to tell kube-proxy to use IPVS

- IPVS is a more powerful load balancing framework

  (remember: iptables was primarily designed for firewalling, not load balancing!)

- It is also possible to replace kube-proxy with kube-router

- kube-router uses IPVS by default

- kube-router can also perform other functions

  (e.g., we can use it as a CNI plugin to provide pod connectivity)

---

class: extra-details

## What about the `kubernetes` service?

- If we try to connect, it won't work

  (by default, it should be `10.0.0.1`)

- If we look at the Endpoints for this service, we will see one endpoint:

  `host-address:6443`

- By default, the API server expects to be running directly on the nodes

  (it could be as a bare process, or in a container/pod using the host network)

- ...
  And it expects to be listening on port 6443 with TLS

---

# Adding nodes to the cluster

- So far, our cluster has only 1 node

- Let's see what it takes to add more nodes

---

## Next steps

- We will need some files that are on the tutorial GitHub repo

.exercise[

- Clone the repository containing the workshop materials:
  ```bash
  git clone https://container.training
  ```

]

---

## Control plane

- We can use the control plane that we deployed on node1

- If that didn't quite work, don't panic!

- We provide a way to catch up and get a control plane in a pinch

---

## Cleaning up

- Only do this if your control plane doesn't work and you want to start over

.exercise[

- Reboot the node to make sure nothing else is running:
  ```bash
  sudo reboot
  ```

- Log in again:
  ```bash
  ssh docker@`A.B.C.D`
  ```

- Get root:
  ```bash
  sudo -i
  ```

]

---

## Catching up

- We will use a Compose file to start the control plane components

.exercise[

- Start the Docker Engine:
  ```bash
  dockerd
  ```

- Go to the `compose/simple-k8s-control-plane` directory:
  ```bash
  cd container.training/compose/simple-k8s-control-plane
  ```

- Start the control plane:
  ```bash
  docker-compose up
  ```

]

---

## Checking the control plane status

- Before moving on, verify that the control plane works

.exercise[

- Show control plane component statuses:
  ```bash
  kubectl get componentstatuses
  kubectl get cs
  ```

- Show the (empty) list of nodes:
  ```bash
  kubectl get nodes
  ```

]

---

class: extra-details

## Differences with the other control plane

- Our new control plane listens on `0.0.0.0` instead of the default `127.0.0.1`

- The ServiceAccount admission plugin is disabled

---

## Joining the nodes

- We need to generate a `kubeconfig` file for kubelet

- This time, we need to put the public IP address of `node1`

  (instead of `localhost` or `127.0.0.1`)

.exercise[

- Generate the `kubeconfig` file:
  ```bash
  kubectl config set-cluster kubenet --server http://`X.X.X.X`:8080
  kubectl config set-context kubenet --cluster kubenet
  kubectl config use-context kubenet
  cp ~/.kube/config ~/kubeconfig
  ```

]

---

## Distributing the `kubeconfig` file

- We need that `kubeconfig` file on the other nodes, too

.exercise[

- Copy `~/.kube/config` to the other nodes

  (Given the size of the file, you can copy-paste it!)

]

---

## Starting kubelet

*The following assumes that you copied the kubeconfig file to /tmp/kubeconfig.*

.exercise[

- Log into node2

- Start the Docker Engine:
  ```bash
  sudo dockerd &
  ```

- Start kubelet:
  ```bash
  sudo kubelet --kubeconfig /tmp/kubeconfig
  ```

]

Repeat on more nodes if desired.
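
Since the file is so small, another way to get it onto a node is to write it there directly. The following is a minimal sketch that mirrors the simplified `~/.kube/config` shown earlier, with the `kubenet` names used above; the `write_kubeconfig` helper is hypothetical, and `192.0.2.10` is a placeholder address standing in for the public IP address of the first node:

```bash
# Hypothetical helper: write a minimal kubeconfig pointing at http://SERVER_IP:8080.
write_kubeconfig() {
  local server_ip=$1 file=$2
  cat >"$file" <<EOF
apiVersion: v1
kind: Config
current-context: kubenet
contexts:
- name: kubenet
  context:
    cluster: kubenet
clusters:
- name: kubenet
  cluster:
    server: http://$server_ip:8080
EOF
}

# Demo: write to a temporary file (on a real node, this would be /tmp/kubeconfig).
KUBECONFIG_DEMO=$(mktemp)
write_kubeconfig 192.0.2.10 "$KUBECONFIG_DEMO"
cat "$KUBECONFIG_DEMO"
```

The resulting file can then be passed to kubelet with `--kubeconfig`, exactly like the copied one.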
.debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- ## If we're running the "old" control plane - By default, the API server only listens on localhost - The other nodes will not be able to connect (symptom: a flood of `node "nodeX" not found` messages) - We need to add `--address 0.0.0.0` to the API server (yes, [this will expose our API server to all kinds of shenanigans](https://twitter.com/TabbySable/status/1188901099446554624)) - Restarting API server might cause scheduler and controller manager to quit (you might have to restart them) .debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- ## Checking cluster status - We should now see all the nodes - At first, their `STATUS` will be `NotReady` - They will move to `Ready` state after approximately 10 seconds .exercise[ - Check the list of nodes: ```bash kubectl get nodes ``` ] .debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- ## Deploy a web server - Let's create a Deployment and scale it (so that we have multiple pods on multiple nodes) .exercise[ - Create a Deployment running httpenv: ```bash kubectl create deployment httpenv --image=jpetazzo/httpenv ``` - Scale it: ```bash kubectl scale deployment httpenv --replicas=5 ``` ] .debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- ## Check our pods - The pods will be scheduled on the nodes - The nodes will pull the `jpetazzo/httpenv` image, and start the pods - What are the IP addresses of our pods? .exercise[ - Check the IP addresses of our pods ```bash kubectl get pods -o wide ``` ] -- 🤔 Something's not right ... Some pods have the same IP address! .debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- ## What's going on? 
- Without the `--network-plugin` flag, kubelet defaults to "no-op" networking - It lets the container engine use a default network (in that case, we end up with the default Docker bridge) - Our pods are running on independent, disconnected, host-local networks .debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- ## What do we need to do? - On a normal cluster, kubelet is configured to set up pod networking with CNI plugins - This requires: - installing CNI plugins - writing CNI configuration files - running kubelet with `--network-plugin=cni` .debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- ## Using network plugins - We need to set up a better network - Before diving into CNI, we will use the `kubenet` plugin - This plugin creates a `cbr0` bridge and connects the containers to that bridge - This plugin allocates IP addresses from a range: - either specified to kubelet (e.g. with `--pod-cidr`) - or stored in the node's `spec.podCIDR` field .footnote[See [here] for more details about this `kubenet` plugin.] 
[here]: https://kubernetes.io/docs/concepts/extend-kubernetes/compute-storage-net/network-plugins/#kubenet .debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- ## What `kubenet` does and *does not* do - It allocates IP addresses to pods *locally* (each node has its own local subnet) - It connects the pods to a *local* bridge (pods on the same node can communicate together; not with other nodes) - It doesn't set up routing or tunneling (we get pods on separated networks; we need to connect them somehow) - It doesn't allocate subnets to nodes (this can be done manually, or by the controller manager) .debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- ## Setting up routing or tunneling - *On each node*, we will add routes to the other nodes' pod network - Of course, this is not convenient or scalable! - We will see better techniques to do this; but for now, hang on! .debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- ## Allocating subnets to nodes - There are multiple options: - passing the subnet to kubelet with the `--pod-cidr` flag - manually setting `spec.podCIDR` on each node - allocating node CIDRs automatically with the controller manager - The last option would be implemented by adding these flags to controller manager: ``` --allocate-node-cidrs=true --cluster-cidr=<cidr> ``` .debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- class: extra-details ## The pod CIDR field is not mandatory - `kubenet` needs the pod CIDR, but other plugins don't need it (e.g. 
because they allocate addresses in multiple pools, or a single big one) - The pod CIDR field may eventually be deprecated and replaced by an annotation (see [kubernetes/kubernetes#57130](https://github.com/kubernetes/kubernetes/issues/57130)) .debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- ## Restarting kubelet with pod CIDR - We need to stop and restart all our kubelets - We will add the `--network-plugin` and `--pod-cidr` flags - Each of us has a "cluster number" (let's call it `C`) printed on our VM info card - We will use pod CIDR `10.C.N.0/24` (where `N` is the node number: 1, 2, 3) .exercise[ - Stop all the kubelets (Ctrl-C is fine) - Restart them all, adding `--network-plugin=kubenet --pod-cidr 10.C.N.0/24` ] .debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- ## What happens to our pods? - When we stop (or kill) kubelet, the containers keep running - When kubelet starts again, it detects the containers .exercise[ - Check that our pods are still here: ```bash kubectl get pods -o wide ``` ] 🤔 But our pods still use local IP addresses! 
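To spot these leftover addresses without reading the whole table, a small filter can help. The sketch below is an illustration, not a kubectl feature: `flag_foreign_ips` is a hypothetical helper that reads `NAME IP` pairs and prints the pods whose address is outside `10.C.0.0/16`. The sample addresses are made up.

```bash
# Reads "NAME IP" pairs on stdin and prints the pods whose address is
# outside 10.C.0.0/16 (C is the cluster number passed as first argument).
# flag_foreign_ips is a hypothetical helper name.
flag_foreign_ips() {
  local C="$1" NAME IP
  while read -r NAME IP; do
    case "$IP" in
      10."$C".*) ;;                 # address is inside our pod range
      *) echo "$NAME uses $IP" ;;   # address comes from somewhere else
    esac
  done
}

# Sample input (made-up pod names and addresses):
printf '%s\n' "httpenv-a 172.17.0.2" "httpenv-b 10.5.2.3" | flag_foreign_ips 5
```

On a live cluster, the input could come from `kubectl get pods --no-headers -o custom-columns=NAME:.metadata.name,IP:.status.podIP`.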
.debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- ## Recreating the pods - The IP address of a pod cannot change - kubelet doesn't automatically kill/restart containers with "invalid" addresses <br/> (in fact, from kubelet's point of view, there is no such thing as an "invalid" address) - We must delete our pods and recreate them .exercise[ - Delete all the pods, and let the ReplicaSet recreate them: ```bash kubectl delete pods --all ``` - Wait for the pods to be up again: ```bash kubectl get pods -o wide -w ``` ] .debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- ## Adding kube-proxy - Let's start kube-proxy to provide internal load balancing - Then see if we can create a Service and use it to contact our pods .exercise[ - Start kube-proxy: ```bash sudo kube-proxy --kubeconfig ~/.kube/config ``` - Expose our Deployment: ```bash kubectl expose deployment httpenv --port=8888 ``` ] .debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- ## Test internal load balancing .exercise[ - Retrieve the ClusterIP address: ```bash kubectl get svc httpenv ``` - Send a few requests to the ClusterIP address (with `curl`) ] -- Sometimes it works, sometimes it doesn't. Why? .debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- ## Routing traffic - Our pods have new, distinct IP addresses - But they are on host-local, isolated networks - If we try to ping a pod on a different node, it won't work - kube-proxy merely rewrites the destination IP address - But we need that IP address to be reachable in the first place - How do we fix this? (hint: check the title of this slide!) 
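To put a number on "sometimes it works", we can repeat the request and count the successes. This is a minimal sketch: `count_ok` is a hypothetical helper that runs any command a given number of times and reports how many runs exited successfully.

```bash
# Runs a command N times and reports how many attempts succeeded.
# count_ok is a hypothetical helper; any command can be plugged in.
count_ok() {
  local N="$1" OK=0 I
  shift
  for I in $(seq "$N"); do
    if "$@" >/dev/null 2>&1; then
      OK=$((OK + 1))
    fi
  done
  echo "$OK/$N requests succeeded"
}
```

For instance, `count_ok 10 curl -s -m 2 http://X.X.X.X:8888` (with the ClusterIP in place of `X.X.X.X`) sends ten requests with a two-second timeout each. The requests that fail should be the ones that were sent to pods running on other nodes.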
.debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- ## Important warning - The technique that we are about to use doesn't work everywhere - It only works if: - all the nodes are directly connected to each other (at layer 2) - the underlying network allows the IP addresses of our pods - If we are on physical machines connected by a switch: OK - If we are on virtual machines in a public cloud: NOT OK - on AWS, we need to disable "source and destination checks" on our instances - on OpenStack, we need to disable "port security" on our network ports .debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- ## Routing basics - We need to tell *each* node: "The subnet 10.C.N.0/24 is located on node N" (for all values of N) - This is how we add a route on Linux: ```bash ip route add 10.C.N.0/24 via W.X.Y.Z ``` (where `W.X.Y.Z` is the internal IP address of node N) - We can see the internal IP addresses of our nodes with: ```bash kubectl get nodes -o wide ``` .debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- ## Firewalling - By default, Docker prevents containers from using arbitrary IP addresses (by setting up iptables rules) - We need to allow our containers to use our pod CIDR - For simplicity, we will insert a blanket iptables rule allowing all traffic: `iptables -I FORWARD -j ACCEPT` - This has to be done on every node .debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- ## Setting up routing .exercise[ - Create all the routes on all the nodes - Insert the iptables rule allowing traffic - Check that you can ping all the pods from one of the nodes - Check that you can `curl` the ClusterIP of the Service successfully ] 
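The routing steps above can be generated instead of typed by hand. The sketch below only prints the commands, using the `10.C.N.0/24` scheme from the earlier slides. `print_route_commands` is a made-up name, and the `172.31.0.x` addresses are placeholders for the internal IP addresses shown by `kubectl get nodes -o wide`.

```bash
# Prints the commands to run on node SELF so that it can reach the pod
# subnets of the other nodes.
# Arguments: cluster number, local node number, then one "N=IP" pair per
# node (N is the node number, IP is that node's internal IP address).
print_route_commands() {
  local C="$1" SELF="$2" PAIR N IP
  shift 2
  echo "iptables -I FORWARD -j ACCEPT"
  for PAIR in "$@"; do
    N="${PAIR%%=*}"     # part before the "="
    IP="${PAIR#*=}"     # part after the "="
    if [ "$N" != "$SELF" ]; then
      echo "ip route add 10.${C}.${N}.0/24 via ${IP}"
    fi
  done
}

# Example for cluster 5, run on node 1 (placeholder addresses):
print_route_commands 5 1 1=172.31.0.11 2=172.31.0.12 3=172.31.0.13
```

After reviewing the output, it can be piped to `sudo sh` on the node in question. No route is printed for the local subnet, since that one is already attached to the local bridge.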
.debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- ## What's next? - We did a lot of manual operations: - allocating subnets to nodes - adding command-line flags to kubelet - updating the routing tables on our nodes - We want to automate all these steps - We want something that works on all networks .debug[[k8s/multinode.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/multinode.md)] --- # The Container Network Interface - Allows us to decouple network configuration from Kubernetes - Implemented by *plugins* - Plugins are executables that will be invoked by kubelet - Plugins are responsible for: - allocating IP addresses for containers - configuring the network for containers - Plugins can be combined and chained when it makes sense .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## Combining plugins - Interface could be created by e.g. `vlan` or `bridge` plugin - IP address could be allocated by e.g. `dhcp` or `host-local` plugin - Interface parameters (MTU, sysctls) could be tweaked by the `tuning` plugin The reference plugins are available [here]. Look in each plugin's directory for its documentation. [here]: https://github.com/containernetworking/plugins/tree/master/plugins .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## How does kubelet know which plugins to use? 
- The plugin (or list of plugins) is set in the CNI configuration - The CNI configuration is a *single file* in `/etc/cni/net.d` - If there are multiple files in that directory, the first one is used (in lexicographic order) - That path can be changed with the `--cni-conf-dir` flag of kubelet .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## CNI configuration in practice - When we set up the "pod network" (like Calico, Weave...) it ships a CNI configuration (and sometimes, custom CNI plugins) - Very often, that configuration (and plugins) is installed automatically (by a DaemonSet featuring an initContainer with hostPath volumes) - Examples: - Calico [CNI config](https://github.com/projectcalico/calico/blob/1372b56e3bfebe2b9c9cbf8105d6a14764f44159/v2.6/getting-started/kubernetes/installation/hosted/calico.yaml#L25) and [volume](https://github.com/projectcalico/calico/blob/1372b56e3bfebe2b9c9cbf8105d6a14764f44159/v2.6/getting-started/kubernetes/installation/hosted/calico.yaml#L219) - kube-router [CNI config](https://github.com/cloudnativelabs/kube-router/blob/c2f893f64fd60cf6d2b6d3fee7191266c0fc0fe5/daemonset/generic-kuberouter.yaml#L10) and [volume](https://github.com/cloudnativelabs/kube-router/blob/c2f893f64fd60cf6d2b6d3fee7191266c0fc0fe5/daemonset/generic-kuberouter.yaml#L73) .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- class: extra-details ## Conf vs conflist - There are two slightly different configuration formats - Basic configuration format: - holds configuration for a single plugin - typically has a `.conf` name suffix - has a `type` string field in the top-most structure - [examples](https://github.com/containernetworking/cni/blob/master/SPEC.md#example-configurations) - Configuration list format: - can hold configuration for multiple (chained) plugins - typically has a `.conflist` name suffix - has a `plugins` list field in the 
top-most structure - [examples](https://github.com/containernetworking/cni/blob/master/SPEC.md#network-configuration-lists) .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- class: extra-details ## How plugins are invoked - Parameters are given through environment variables, including: - CNI_COMMAND: desired operation (ADD, DEL, CHECK, or VERSION) - CNI_CONTAINERID: container ID - CNI_NETNS: path to network namespace file - CNI_IFNAME: what the network interface should be named - The network configuration must be provided to the plugin on stdin (this avoids race conditions that could happen by passing a file path) .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## In practice: kube-router - We are going to reconfigure our cluster (control plane and kubelets) - kube-router will provide the "pod network" (connectivity with pods) - kube-router will also provide internal service connectivity (replacing kube-proxy) .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## How kube-router works - Very simple architecture - Does not introduce new CNI plugins (uses the `bridge` plugin, with `host-local` for IPAM) - Pod traffic is routed between nodes (no tunnel, no new protocol) - Internal service connectivity is implemented with IPVS - Can provide pod network and/or internal service connectivity - kube-router daemon runs on every node .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## What kube-router does - Connect to the API server - Obtain the local node's `podCIDR` - Inject it into the CNI configuration file (we'll use `/etc/cni/net.d/10-kuberouter.conflist`) - Obtain the addresses of all nodes - Establish a *full mesh* BGP peering with the other nodes - Exchange routes over BGP 
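To make the "inject the `podCIDR` into the CNI configuration" step more concrete, here is a rough sketch of what such a configuration list could look like once the subnet is filled in. It is an illustration built from the reference `bridge` and `host-local` plugins; the network name `mynet` and the `cniVersion` value are placeholders, `print_conflist` is a made-up helper, and the file that kube-router actually writes may differ.

```bash
# Prints a CNI configuration list with a single plugin: `bridge`, using
# `host-local` IPAM restricted to the subnet given as argument.
# "mynet" and the cniVersion are placeholders chosen for this sketch.
print_conflist() {
  local SUBNET="$1"
  cat <<EOF
{
  "cniVersion": "0.3.0",
  "name": "mynet",
  "plugins": [
    {
      "type": "bridge",
      "bridge": "kube-bridge",
      "isDefaultGateway": true,
      "ipam": { "type": "host-local", "subnet": "$SUBNET" }
    }
  ]
}
EOF
}

print_conflist 10.5.2.0/24
```

The `plugins` list at the top level is what makes this a `.conflist` rather than a basic `.conf` file.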
.debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## What's BGP? - BGP (Border Gateway Protocol) is the protocol used between internet routers - It [scales](https://www.cidr-report.org/as2.0/) pretty [well](https://www.cidr-report.org/cgi-bin/plota?file=%2fvar%2fdata%2fbgp%2fas2.0%2fbgp-active%2etxt&descr=Active%20BGP%20entries%20%28FIB%29&ylabel=Active%20BGP%20entries%20%28FIB%29&with=step) (it is used to announce the 700k CIDR prefixes of the internet) - It is spoken by many hardware routers from many vendors - It also has many software implementations (Quagga, Bird, FRR...) - Experienced network folks generally know it (and appreciate it) - It is also used by Calico (another popular network system for Kubernetes) - Using BGP allows us to interconnect our "pod network" with other systems .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## The plan - We'll update the control plane's configuration - the controller manager will allocate `podCIDR` subnets - we will allow privileged containers - We will create a DaemonSet for kube-router - We will restart kubelets in CNI mode - The DaemonSet will automatically start a kube-router pod on each node .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## Getting the files .exercise[ - If you haven't cloned the training repo yet, do it: ```bash cd ~ git clone https://container.training ``` - Then move to this directory: ```bash cd ~/container.training/compose/kube-router-k8s-control-plane ``` ] .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## Changes to the control plane - The API server must be started with `--allow-privileged` (because we will start kube-router in privileged pods) - The controller manager must be started with extra flags too: `--allocate-node-cidrs` and `--cluster-cidr` 
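As a memory aid, the extra flags can be printed for a given cluster number. This is a sketch under the lab's numbering scheme, where the cluster CIDR is `10.C.0.0/16`; `print_control_plane_flags` is a made-up name, and the flags still have to be added to the existing command lines by hand.

```bash
# Prints the extra control plane flags for cluster number C.
# Assumption: the cluster CIDR follows the lab scheme 10.C.0.0/16.
print_control_plane_flags() {
  local C="$1"
  echo "kube-apiserver: --allow-privileged"
  echo "kube-controller-manager: --allocate-node-cidrs=true --cluster-cidr=10.${C}.0.0/16"
}

print_control_plane_flags 5
```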
.exercise[ - Make these changes! (You might have to restart scheduler and controller manager, too.) ] .footnote[If your control plane is broken, don't worry! <br/>We provide a Compose file to catch up.] .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## Catching up - If your control plane is broken, here is how to start a new one .exercise[ - Make sure the Docker Engine is running, or start it with: ```bash dockerd ``` - Edit the Compose file to change the `--cluster-cidr` - Our cluster CIDR will be `10.C.0.0/16` <br/> (where `C` is our cluster number) - Start the control plane: ```bash docker-compose up ``` ] .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## The kube-router DaemonSet - In the same directory, there is a `kuberouter.yaml` file - It contains the definition for a DaemonSet and a ConfigMap - Before we load it, we also need to edit it - We need to indicate the address of the API server (because kube-router needs to connect to it to retrieve node information) .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## Creating the DaemonSet - The address of the API server will be `http://A.B.C.D:8080` (where `A.B.C.D` is the public address of `node1`, running the control plane) .exercise[ - Edit the YAML file to set the API server address: ```bash vim kuberouter.yaml ``` - Create the DaemonSet: ```bash kubectl create -f kuberouter.yaml ``` ] .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## Restarting kubelets - We don't need the `--pod-cidr` option anymore (the controller manager will allocate these automatically) - We need to pass `--network-plugin=cni` .exercise[ - Join the first node: ```bash sudo kubelet --kubeconfig ~/kubeconfig --network-plugin=cni ``` - Open more terminals and join the other nodes: ```bash ssh 
node2 sudo kubelet --kubeconfig ~/kubeconfig --network-plugin=cni ssh node3 sudo kubelet --kubeconfig ~/kubeconfig --network-plugin=cni ``` ] .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## Check kube-router pods - Make sure that kube-router pods are running .exercise[ - List pods in the `kube-system` namespace: ```bash kubectl get pods --namespace=kube-system ``` ] If the pods aren't running, possible causes are: - privileged containers aren't enabled <br/>(add `--allow-privileged` flag to the API server) - missing service account token <br/>(add `--disable-admission-plugins=ServiceAccount` flag) .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## Testing - Let's delete all pods - They should be re-created with new, correct addresses .exercise[ - Delete all pods: ```bash kubectl delete pods --all ``` - Check the new pods: ```bash kubectl get pods -o wide ``` ] Note: if you provisioned a new control plane, re-create and re-expose the deployment. .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## Checking that everything works .exercise[ - Get the ClusterIP address for the service: ```bash kubectl get svc httpenv ``` - Send a few requests there: ```bash curl `X.X.X.X`:8888 ``` ] Note that if you send multiple requests, they are load-balanced in a round robin manner. This shows that we are using IPVS (vs. iptables, which picked random endpoints). Problems? Check next slide! .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## If it doesn't quite work ... 
- If we used kubenet before, we now have a `cbr0` bridge - This bridge (and its subnet) might conflict with what we're using now - To see if it's the case, check if you have duplicate routes with `ip ro` - To fix it, delete the old bridge with `ip link del cbr0` .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## Troubleshooting - What if we need to check that everything is working properly? .exercise[ - Check the IP addresses of our pods: ```bash kubectl get pods -o wide ``` - Check our routing table: ```bash route -n ip route ``` ] We should see the local pod CIDR connected to `kube-bridge`, and the other nodes' pod CIDRs having individual routes, with each node being the gateway. .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## More troubleshooting - We can also look at the output of the kube-router pods (with `kubectl logs`) - kube-router also comes with a special shell that gives lots of useful info (we can access it with `kubectl exec`) - But with the current setup of the cluster, these options may not work! - Why? .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## Trying `kubectl logs` / `kubectl exec` .exercise[ - Try to show the logs of a kube-router pod: ```bash kubectl -n kube-system logs ds/kube-router ``` - Or try to exec into one of the kube-router pods: ```bash kubectl -n kube-system exec kube-router-xxxxx bash ``` ] These commands will give an error message that includes: ``` dial tcp: lookup nodeX on 127.0.0.11:53: no such host ``` What does that mean? .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## Internal name resolution - To execute these commands, the API server needs to connect to kubelet - By default, it creates a connection using the kubelet's name (e.g. 
`http://node1:...`) - This requires our node names to be in DNS - We can change that by setting a flag on the API server: `--kubelet-preferred-address-types=InternalIP` .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## Another way to check the logs - We can also get the logs directly from the container engine - First, get the container ID, with `docker ps` or like this: ```bash CID=$(docker ps -q \ --filter label=io.kubernetes.pod.namespace=kube-system \ --filter label=io.kubernetes.container.name=kube-router) ``` - Then view the logs: ```bash docker logs $CID ``` .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- class: extra-details ## Other ways to distribute routing tables - We don't need kube-router and BGP to distribute routes - The list of nodes (and associated `podCIDR` subnets) is available through the API - This shell snippet generates the commands to add all required routes on a node: ```bash NODES=$(kubectl get nodes -o name | cut -d/ -f2) for DESTNODE in $NODES; do if [ "$DESTNODE" != "$HOSTNAME" ]; then echo $(kubectl get node $DESTNODE -o go-template=" route add -net {{.spec.podCIDR}} gw {{(index .status.addresses 0).address}}") fi done ``` - This could be useful for embedded platforms with very limited resources (or lab environments for learning purposes) .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- # Interconnecting clusters - We assigned different Cluster CIDRs to each cluster - This allows us to connect our clusters together - We will leverage kube-router BGP abilities for that - We will *peer* each kube-router instance with a *route reflector* - As a result, we will be able to ping each other's pods .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## Disclaimers - There are many methods to interconnect 
clusters - Depending on your network implementation, you will use different methods - The method shown here only works for nodes with direct layer 2 connection - We will often need to use tunnels or other network techniques .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## The plan - Someone will start the *route reflector* (typically, that will be the person presenting these slides!) - We will update our kube-router configuration - We will add a *peering* with the route reflector (instructing kube-router to connect to it and exchange route information) - We should see the routes to other clusters on our nodes (in the output of e.g. `route -n` or `ip route show`) - We should be able to ping pods of other clusters .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## Starting the route reflector - Only do this slide if you are doing this on your own - There is a Compose file in the `compose/frr-route-reflector` directory - Before continuing, make sure that you have the IP address of the route reflector .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## Configuring kube-router - This can be done in two ways: - with command-line flags to the `kube-router` process - with annotations to Node objects - We will use the command-line flags (because they will automatically propagate to all nodes) .footnote[Note: with Calico, this is achieved by creating a BGPPeer CRD.] 
.debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## Updating kube-router configuration - We need to pass two command-line flags to the kube-router process .exercise[ - Edit the `kuberouter.yaml` file - Add the following flags to the kube-router arguments: ``` - "--peer-router-ips=`X.X.X.X`" - "--peer-router-asns=64512" ``` (Replace `X.X.X.X` with the route reflector address) - Update the DaemonSet definition: ```bash kubectl apply -f kuberouter.yaml ``` ] .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## Restarting kube-router - The DaemonSet will not update the pods automatically (it is using the default `updateStrategy`, which is `OnDelete`) - We will therefore delete the pods (they will be recreated with the updated definition) .exercise[ - Delete all the kube-router pods: ```bash kubectl delete pods -n kube-system -l k8s-app=kube-router ``` ] Note: the other `updateStrategy` for a DaemonSet is RollingUpdate. <br/> For critical services, we might want to precisely control the update process. .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## Checking peering status - We can see informative messages in the output of kube-router: ``` time="2019-04-07T15:53:56Z" level=info msg="Peer Up" Key=X.X.X.X State=BGP_FSM_OPENCONFIRM Topic=Peer ``` - We should see the routes of the other clusters show up - For debugging purposes, the reflector also exports a route to 1.0.0.2/32 - That route will show up like this: ``` 1.0.0.2 172.31.X.Y 255.255.255.255 UGH 0 0 0 eth0 ``` - We should be able to ping the pods of other clusters! .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- ## If we wanted to do more ... 
- kube-router can also export ClusterIP addresses (by adding the flag `--advertise-cluster-ip`) - They are exported individually (as /32) - This would allow us to easily access other clusters' services (without having to resolve the individual addresses of pods) - Even better if it's combined with DNS integration (to facilitate name → ClusterIP resolution) .debug[[k8s/cni.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/k8s/cni.md)] --- class: title, talk-only What's missing? .debug[[lisa/end.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/lisa/end.md)] --- ## What's missing? - Mostly: security - Notably: RBAC - Also: availability .debug[[lisa/end.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/lisa/end.md)] --- ## TLS! TLS everywhere! - Create certs for the control plane: - etcd - API server - controller manager - scheduler - Create individual certs for nodes - Create the service account key pair .debug[[lisa/end.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/lisa/end.md)] --- ## Service accounts - The controller manager will generate tokens for service accounts (these tokens are JWT, JSON Web Tokens, signed with a specific key) - The API server will validate these tokens (with the matching key) .debug[[lisa/end.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/lisa/end.md)] --- ## Nodes - Enable NodeRestriction admission controller - authorizes kubelets to update only their own node and pod data - Enable Node Authorizer - prevents kubelets from accessing data that they shouldn't - only authorize access to e.g. a configmap if a pod is using it - Bootstrap tokens - add nodes to the cluster safely+dynamically .debug[[lisa/end.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/lisa/end.md)] --- ## Consequences of API server outage - What happens if the API server goes down? 
- kubelet will try to reconnect (as long as necessary) - our apps will be just fine (but autoscaling will be broken) - How can we improve the API server availability? - redundancy (the API server is stateless) - achieve a low MTTR .debug[[lisa/end.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/lisa/end.md)] --- ## Improving API server availability - Redundancy requires adding a layer (between API clients and servers) - Multiple options available: - external load balancer - local load balancer (NGINX, HAProxy... on each node) - DNS Round-Robin .debug[[lisa/end.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/lisa/end.md)] --- ## Achieving a low MTTR - Run the control plane in highly available VMs (e.g. many hypervisors can do that, with shared or mirrored storage) - Run the control plane in highly available containers (e.g. on another Kubernetes cluster) .debug[[lisa/end.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/lisa/end.md)] --- class: title Thank you! .debug[[lisa/end.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/lisa/end.md)] --- ## A word from my sponsor - If you liked this presentation and would like me to train your team ... Contact me: jerome.petazzoni@gmail.com - Thank you! ♥️ - Also, slides👇🏻  .debug[[lisa/end.md](https://github.com/jpetazzo/container.training/tree/lisa-2019-10/slides/lisa/end.md)]